Dr. Fadhil Hidayat, S. Kom., M. T.

STEI-ITB

Prof. Dr. Ir. Suhono Harso Supangkat, M. Eng.

STEI-ITB

Ir. Budiman Dabarsyah, M.S.EE.

STEI-ITB

Abstract

Facial expressions are a vital aspect of human communication, and recognizing them automatically is increasingly important in the era of digital advancement. This research presents an approach to facial expression recognition that combines the Xception model with smoothing algorithms. The model is trained using transfer learning to improve its accuracy in identifying emotions such as happiness, sadness, anger, surprise, disgust, and fear. The smoothing algorithms further improve the system’s reliability by reducing noise and fluctuations in the detected emotions. The system shows promising results with an accuracy of 71%. Future work aims to improve accuracy and explore its application in customer satisfaction analysis. The system is intended to improve event experiences and customer engagement in sectors such as education, technology, and health.

Keywords: Emotion Recognition, Xception, Transfer Learning, Smoothing Algorithms.

Introduction

Emotion recognition technology, which interprets human emotions through digital interfaces, is becoming essential in sectors like retail, healthcare, and entertainment to enhance customer engagement and satisfaction. By analyzing facial expressions in real time, businesses can gain valuable insights and tailor their interactions, surpassing traditional feedback methods like surveys. This study explores an emotion recognition system using video analysis to assess customer satisfaction at events, providing immediate feedback and enabling timely adjustments. This technology has the potential to revolutionize customer engagement across various industries.

Research Method

Figure 1 shows the stages of the method used to build the system. The model is trained on the FER-2013 dataset. The Xception model, which is well known for its accuracy and feature extraction capabilities, is integrated with FaceNet and DLIB and trained with transfer learning so that its pre-trained weights can be reused for emotion detection. The trained model is then deployed for inference on real-time video input, where the system recognizes a person’s emotions and groups them into three emotion classes.
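The transfer learning step described above can be sketched roughly as follows. This is a minimal sketch under stated assumptions, not the authors' implementation: it uses the Keras Xception application with ImageNet weights, freezes the backbone, and adds a small classification head. The function name `build_emotion_model`, the 96x96 working resolution, the dropout rate, the optimizer, and the frozen-backbone choice are all assumptions made for illustration. The FaceNet and DLIB stages are not shown.

```python
import tensorflow as tf

layers = tf.keras.layers

# FER-2013 provides 48x48 grayscale faces labelled with seven emotions.
NUM_EMOTIONS = 7


def build_emotion_model(weights="imagenet", input_size=96):
    """Hypothetical helper: Xception backbone plus a new softmax head.

    `input_size` is an assumption; Keras Xception without its top layers
    needs inputs of at least 71x71, so the 48x48 faces are upsampled.
    """
    inputs = tf.keras.Input(shape=(48, 48, 1))
    x = layers.Resizing(input_size, input_size)(inputs)
    # Repeat the single gray channel three times to mimic an RGB image.
    x = layers.Concatenate(axis=-1)([x, x, x])
    # Xception expects pixel values scaled from [0, 255] to [-1, 1].
    x = layers.Rescaling(1.0 / 127.5, offset=-1.0)(x)

    backbone = tf.keras.applications.Xception(
        include_top=False,
        weights=weights,
        input_shape=(input_size, input_size, 3),
    )
    backbone.trainable = False  # assumption: only the new head is trained
    x = backbone(x, training=False)

    x = layers.GlobalAveragePooling2D()(x)
    x = layers.Dropout(0.3)(x)  # assumed regularization, not from the paper
    outputs = layers.Dense(NUM_EMOTIONS, activation="softmax")(x)

    model = tf.keras.Model(inputs, outputs)
    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=1e-3),
        loss="sparse_categorical_crossentropy",
        metrics=["accuracy"],
    )
    return model
```

Calling `build_emotion_model()` downloads the ImageNet weights on first use. The returned model can then be fitted on arrays of shape `(N, 48, 48, 1)` with integer labels from 0 to 6. Unfreezing part of the backbone for a second fine-tuning pass is a common variation, but whether the study did so is not stated.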

Discussion & Result

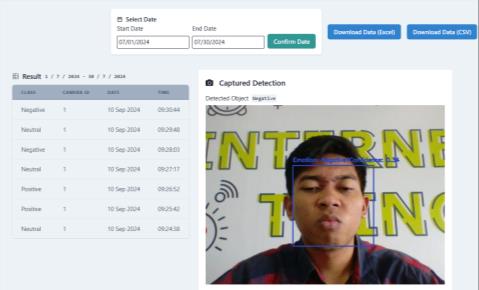

The developed system recognizes facial emotions and groups them into three classes, namely positive, neutral, and negative, as shown in Figure 2. The positive class contains happy. The negative class contains disgust, sad, fear, and angry, while the neutral class contains neutral and surprised. The model is evaluated using the confusion matrix shown in Table 1. It achieves a fairly good accuracy of 71.1%, which is consistent with the other evaluation metrics: precision of 72.1%, recall of 71.1%, and F1-score of 71.4%. The detection results are then displayed on the dashboard, as shown in Figure 3.
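The grouping of the seven emotions into three classes can be illustrated with a small lookup table. This is a sketch only: the grouping itself comes from the text above, while the label spellings, the helper names `to_sentiment` and `sentiment_shares`, and the idea of reporting per-class shares are assumptions made for illustration.

```python
from collections import Counter

SENTIMENTS = ("positive", "neutral", "negative")

# Grouping as described in the text; label spellings are assumed.
SENTIMENT_BY_EMOTION = {
    "happy": "positive",
    "neutral": "neutral",
    "surprised": "neutral",
    "disgust": "negative",
    "sad": "negative",
    "fear": "negative",
    "angry": "negative",
}


def to_sentiment(emotion: str) -> str:
    """Map one predicted emotion label to its three-class group."""
    return SENTIMENT_BY_EMOTION[emotion.strip().lower()]


def sentiment_shares(emotions) -> dict:
    """Return the fraction of predictions that fall into each group."""
    counts = Counter(to_sentiment(e) for e in emotions)
    total = sum(counts.values())
    return {s: (counts[s] / total if total else 0.0) for s in SENTIMENTS}
```

For example, `sentiment_shares(["happy", "sad", "neutral", "surprised"])` yields a quarter positive, a half neutral, and a quarter negative, which is the kind of summary a dashboard could display.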

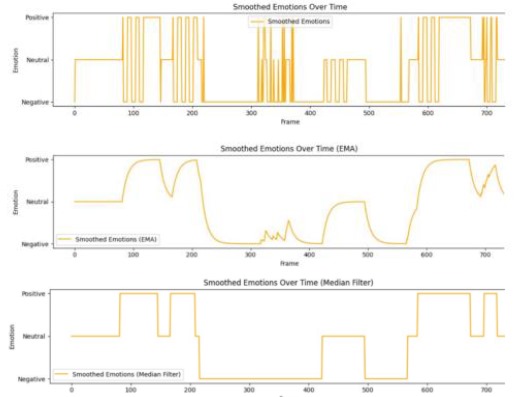

A challenge during inference is flickering, where the predicted expression class changes rapidly from frame to frame. This study applies a smoothing algorithm to make recognition more stable. Three candidate algorithms are compared, namely the Kalman Filter, Moving Average, and Median Filter, as shown in Figure 4. The graph plots the recognized class against the frame number. The Moving Average and Median Filter produce smoother output and are more suitable than the Kalman Filter, whose recognized class shifts very frequently.
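The moving average and median filter can be sketched as sliding-window operations over the per-frame class sequence. This is a minimal sketch, not the study's implementation: it assumes the three classes are encoded on an ordinal scale (negative = -1, neutral = 0, positive = 1), and the function name `smooth_classes` and the window size are hypothetical. The Kalman filter is omitted.

```python
from collections import deque
from statistics import median

# Assumed ordinal encoding of the three classes.
CODE = {"negative": -1, "neutral": 0, "positive": 1}
LABEL = {code: label for label, code in CODE.items()}


def smooth_classes(labels, window=5, method="median"):
    """Smooth a per-frame class sequence over the last `window` frames."""
    recent = deque(maxlen=window)  # holds only the most recent frames
    smoothed = []
    for label in labels:
        recent.append(CODE[label])
        if method == "median":
            value = median(recent)
        elif method == "mean":
            value = sum(recent) / len(recent)
        else:
            raise ValueError(f"unknown method: {method}")
        # Round back to the nearest class code; exact halves (+0.5 or -0.5)
        # resolve to neutral because Python rounds halves to the even integer.
        smoothed.append(LABEL[round(value)])
    return smoothed


frames = ["positive", "positive", "negative", "positive", "positive"]
print(smooth_classes(frames, window=3, method="median"))
print(smooth_classes(frames, window=3, method="mean"))
```

In this toy sequence the median filter discards the single-frame negative outlier entirely, while the moving average is pulled toward neutral for as long as the outlier stays in the window. A larger window gives more stability at the cost of slower reaction to genuine changes in expression.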

Conclusion

This paper presents a video analytics-based emotion recognition system that combines the feature extraction capabilities of the Xception model with a smoothing algorithm to address the flickering of predicted classes in expression recognition. The model achieves a fairly good accuracy of 71.1%, which is consistent with the other evaluation metrics: precision of 72.1%, recall of 71.1%, and F1-score of 71.4%. Future work will explore the integration of multimodal data sources, such as audio and text, to improve the accuracy and richness of emotion analysis. The system is intended to improve event experiences and customer engagement in sectors such as education, technology, and health.

Publication

• Face Recognition for Automatic Border Control: A Systematic Literature Review Journal (https://doi.org/10.1109/ACCESS.2024.3373264)

• Comparative Analysis of FaceNet and ArcFace in Minimizing False Positives for Enhanced Access Control Security (https://doi.org/10.1109/ICISS62896.2024.10750931)

• Face Morph Detection: A Systematic Review (https://doi.org/10.1109/ICISS55894.2022.9915233)