Ayu Purwarianti

STEI-ITB

Abstract

In today's global era, English proficiency is an important skill for Indonesia's current and future workforce. This need has driven the availability of various commercial English learning tools, which are not affordable for lower-middle-income groups in Indonesia. In natural language processing (NLP), generative language models (GLMs) are increasingly used for language learning, especially for English.

Our research combines a GLM with a common messaging application available on mobile phones to make English language learning affordable for most people in Indonesia. We collected English learning materials and exams as resources for our Intelligent Tutoring System (ITS) for English learning. We built and deployed the modules, which users can access through the Telegram messaging application. We also conducted a user survey to analyze users' opinions of our English learning module.

Survey results indicate the following quantitative values: a System Usability Scale (SUS) score of 90, a Single Ease Question (SEQ) score of 6.89, a helpfulness rating of 4.39 out of 5, a friendliness rating of 4.61 out of 5, and a conciseness score of 4.00 out of 5. Although these numbers show that the chatbot meets user needs well, there is room for improvement in content presentation, the relevance of example materials, the time needed to generate responses in conversation, and language usage in some sections.

Keywords: English language learning, Intelligent Tutoring System, Generative Language Model.

Introduction

English proficiency is essential for communication, for active participation in the global environment, and for deepening understanding in various fields whose literature is often available in English. However, according to 2022 data from Education First, Indonesia ranked 81st out of 111 countries surveyed on English proficiency, with a score of 469 out of 800. This indicates that Indonesia still has a low level of English proficiency.

With the rapid advancement of technology, many applications can be utilized to address this issue. One such application is the Intelligent Tutoring System (ITS). An Intelligent Tutoring System is a computer system designed to provide customized instruction or feedback to learners without requiring a teacher’s intervention (Psotka and Mutter, 1988).

The ITS application for English language learning uses a GLM to build a chatbot that can serve as an assistant and tutor for learners. The chatbot can provide materials and exercises, or act as a daily conversation partner, to help learners improve their English skills.

This research was conducted to analyze, develop, and experiment with GLMs in an ITS for English language learning.

Research Method

The phases of our research are described below:

1. Application flow design

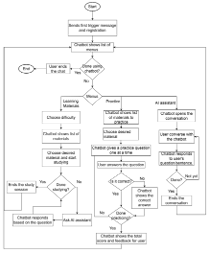

We designed the English language learning application flow to be implemented in our module on Telegram, as shown in Figure 1.

2. Data Preparation

We collected learning materials from 55 websites on English grammar and vocabulary topics. The quality of the collected English learning articles was evaluated through article categorization, material categorization, and data selection. The final result consisted of 95 articles grouped into five levels of difficulty: beginner, elementary, pre-intermediate, intermediate, and upper-intermediate.

3. Model Development & Evaluation

Experiments were conducted to select an open-source GLM for the ITS. Among four candidate models, Llama 3 (7B) was chosen as the main model due to its balance between performance and computational efficiency, its fine-tuning capabilities, and its ability to provide relevant responses in language learning. The chatbot was implemented on the Telegram platform using the Flask framework and a PostgreSQL database. The chatbot was then evaluated through a user survey using usability testing to assess its alignment with user needs.
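
As a rough illustration of how an incoming Telegram message could be routed to the chatbot's learning features, the sketch below maps a command to a handler and builds the parameters for a reply. This is not the authors' code: the command names (`/materials`, `/practice`, `/chat`), the handler functions, and the reply strings are all hypothetical, and the Flask endpoint, the model call, and the PostgreSQL storage are deliberately left out so the example runs with the standard library alone.

```python
from typing import Callable, Dict


# Hypothetical handlers: the paper names the features but not their commands.
def show_materials(text: str) -> str:
    return "Choose a level to start reading materials."


def start_practice(text: str) -> str:
    return "Here is your first practice question."


def ai_conversation(text: str) -> str:
    # Placeholder: a real deployment would forward `text` to the language model.
    return "Let's practice English together."


MENU_HANDLERS: Dict[str, Callable[[str], str]] = {
    "/materials": show_materials,
    "/practice": start_practice,
    "/chat": ai_conversation,
}


def handle_update(update: dict) -> dict:
    """Turn one Telegram update (a parsed JSON dict) into reply parameters."""
    message = update.get("message", {})
    chat_id = message.get("chat", {}).get("id")
    text = (message.get("text") or "").strip()
    # The first whitespace-separated token is treated as the command.
    command = text.split(maxsplit=1)[0].lower() if text else ""
    handler = MENU_HANDLERS.get(command)
    if handler is None:
        reply = "Available menus: " + ", ".join(sorted(MENU_HANDLERS))
    else:
        reply = handler(text)
    return {"chat_id": chat_id, "text": reply}
```

In a webhook-based deployment, a function like `handle_update` would be called from the web framework's request handler with the decoded request body, and the returned dictionary would be sent back through the Telegram Bot API.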

Discussion and Result

The implemented chatbot features three main functions: studying learning materials, solving practice questions, and conversing with the AI. During usability testing, these menus were used in 9 testing scenarios conducted by 2 respondents. The respondents then provided comments and ratings on the usability (user satisfaction) and conciseness of the chatbot.

Based on the usability testing results, the following quantitative scores were obtained: a SUS score of 90, an SEQ score of 6.89, a helpfulness rating of 4.39 out of 5.00, and a friendliness rating of 4.61 out of 5.00. These scores indicate that the chatbot’s design meets user needs; however, there is feedback for improving the chatbot’s implementation. Suggestions include refining the presentation of content by the chatbot, adjusting example sentences in the material to match the difficulty level, reducing response time in AI conversations, and modifying the language that remains stiff in the material and practice menus.
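
The paper does not describe how the SUS score was computed. For readers unfamiliar with the metric, the sketch below shows the standard SUS scoring procedure, assuming the usual ten-item questionnaire answered on a 1 to 5 scale. The function names are illustrative, and the sample answers in the usage note are invented, not the study's data.

```python
from typing import List, Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Score one respondent's ten SUS answers (each 1-5) on the 0-100 scale."""
    if len(responses) != 10:
        raise ValueError("SUS uses exactly 10 items")
    if any(r < 1 or r > 5 for r in responses):
        raise ValueError("each answer must be between 1 and 5")
    total = 0
    for item, answer in enumerate(responses, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += (answer - 1) if item % 2 == 1 else (5 - answer)
    return total * 2.5


def mean_sus(all_responses: List[Sequence[int]]) -> float:
    """Average the per-respondent SUS scores."""
    scores = [sus_score(r) for r in all_responses]
    return sum(scores) / len(scores)
```

For example, `mean_sus([[5, 1, 5, 1, 5, 1, 5, 1, 5, 1], [3] * 10])` averages a best-possible questionnaire (100) with an all-neutral one (50).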

Meanwhile, the chatbot’s conciseness was rated at 4.00 out of 5.00. The chatbot was deemed capable of providing clear answers to user questions in a concise manner. However, the character limit on Telegram messages resulted in some parts of lengthy responses being cut off, which affected the overall conciseness.
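
One possible way to avoid cut-off responses is to split a long reply into several messages before sending. The sketch below is not part of the evaluated system: it assumes a limit of 4096 characters per Telegram text message, and the helper name `split_message` is hypothetical. It prefers to break at a newline or a space and falls back to a hard split when no whitespace is available.

```python
from typing import List

# Assumed per-message character limit for Telegram text messages.
TELEGRAM_MAX_CHARS = 4096


def split_message(text: str, limit: int = TELEGRAM_MAX_CHARS) -> List[str]:
    """Split text into chunks of at most `limit` characters."""
    if limit < 1:
        raise ValueError("limit must be positive")
    chunks: List[str] = []
    remaining = text.strip()
    while len(remaining) > limit:
        # Look one character past the limit so a break exactly at the
        # boundary still yields a full-length chunk.
        window = remaining[: limit + 1]
        cut = window.rfind("\n")
        if cut <= 0:
            cut = window.rfind(" ")
        if cut <= 0:
            cut = limit  # no whitespace found: hard split
        chunks.append(remaining[:cut].rstrip())
        remaining = remaining[cut:].lstrip()
    if remaining:
        chunks.append(remaining)
    return chunks
```

Each returned chunk can then be sent as its own message, in order. Because the last newline in the window is always preferred, a chunk may be shorter than necessary; a production version might only accept a break that falls in the later part of the window.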

Conclusion

In this study, an ITS module was designed and developed using a GLM to assist English language learning in Indonesia. The ITS module was implemented as a chatbot accessible through the Telegram platform, leveraging the Llama 3 (7B) model, the Flask framework with Redis, and a PostgreSQL database. The chatbot has five menus, including three main features for English language learning: studying materials, completing exercises, and engaging in conversations with the AI.

The chatbot was tested using usability testing methods to measure its usability against user needs and to gather comments or suggestions for future development. The testing used quantitative metrics, resulting in an average SUS score of 90, an average SEQ score of 6.89, an average helpfulness score of 4.39 out of 5.00, an average friendliness score of 4.61 out of 5.00, and an average conciseness score of 4.00 out of 5.00. Based on these figures, the chatbot is deemed to meet user needs and provide clear, user-friendly responses. However, these figures are only initial values, and there is still room for improvement. These improvements include presenting content in a more engaging format, tailoring sentence examples in the material to the appropriate level, reducing the necessary time to generate responses in conversation, and using less rigid language in the chatbot’s material and exercise menus.