Nana Sutisna, S.T., M.T., Ph.D.

Sekolah Teknik Elektro dan Informatika

Dr. Eng. Infall Syafalni, S.T., M.Eng.

Sekolah Teknik Elektro dan Informatika

Nur Ahmadi, S.T., M.Eng., Ph.D.

Sekolah Teknik Elektro dan Informatika

Prof. Trio Adiono, S.T., M.T., Ph.D.

Sekolah Teknik Elektro dan Informatika

Abstract

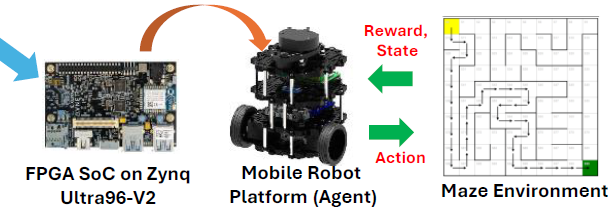

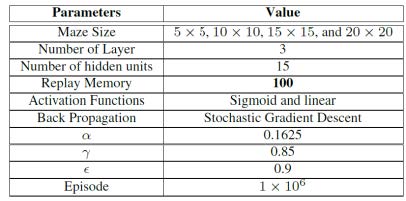

Artificial intelligence (AI) is currently developing rapidly. As system complexity grows, with dynamic environments and large state-action spaces (continuous states and actions), and as the required computing speed rises, conventional Reinforcement Learning techniques such as Q-Learning face limitations in accuracy and computing speed, because the Q-value update process relies on comparing the Q-values in every state. To overcome this problem, the Deep Reinforcement Learning (DRL) technique is adopted to perform Q-value calculation and policy optimization. DRL implementations based on the Deep Q-Network (DQN) algorithm face several major issues: (1) large memory access, (2) different data flows for the Q-value update process and for policy optimization, and (3) the need for a flexible DQN architecture that increases the efficiency of resource utilization and of the computing workload. In this research, a dedicated hardware accelerator has been developed to obtain efficient and optimal performance. To achieve this target, the system is designed as a special-purpose hardware architecture (Application-Specific Integrated Circuit, ASIC) that leverages a system-on-chip (SoC) architecture. In addition, an HW/SW co-design methodology is used to provide flexibility and reconfigurability, and these features also enable design space exploration to find optimal system parameters quickly. Hardware complexity is reduced with several LSI design techniques, such as exploiting shared hardware, employing approximate computing, and optimizing the number of layers. These optimizations result in an efficient design that allows the system to be used in edge computing systems, such as mobile robots.

Keywords: Reinforcement Learning, Deep Q-Network, Hardware Accelerator, System on Chip, FPGA

Introduction

Reinforcement Learning (RL) has been applied in various real-world applications. However, these implementations face challenges due to large state spaces and complex reward formulations.

To address these challenges, deep Reinforcement Learning (DRL), which integrates RL and deep learning, has gained traction because it can handle continuous states and actions. In DRL, a neural network models the agent's decision-making process by mapping environmental states to actions, and it learns an optimal policy that maximizes the expected cumulative reward. This adaptability allows DRL to excel in dynamic and complex environments.
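To make the state-to-action mapping concrete, the following is a minimal sketch (not the authors' design) of how a DQN's Q-network scores each action and how the temporal-difference target used in training is formed with the discount factor. The network shape (one sigmoid hidden layer, linear outputs) and the names `q_values`, `greedy_action`, and `td_target` are illustrative assumptions.

```python
import math

def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))

def q_values(state, w_hidden, w_out):
    """Forward pass: state vector -> one Q-value per action.
    Hypothetical shape: one sigmoid hidden layer, linear output layer."""
    hidden = [sigmoid(sum(w * s for w, s in zip(row, state))) for row in w_hidden]
    return [sum(w * h for w, h in zip(row, hidden)) for row in w_out]

def greedy_action(state, w_hidden, w_out):
    """Greedy policy: pick the action with the largest Q-value."""
    q = q_values(state, w_hidden, w_out)
    return max(range(len(q)), key=q.__getitem__)

def td_target(reward, next_state, done, gamma, w_hidden, w_out):
    """Bellman target y = r + gamma * max_a' Q(s', a'); just r at episode end."""
    if done:
        return reward
    return reward + gamma * max(q_values(next_state, w_hidden, w_out))

# Usage: zero hidden weights make the hidden unit output sigmoid(0) = 0.5,
# so the two actions score 2.0 * 0.5 = 1.0 and 4.0 * 0.5 = 2.0.
w_hidden = [[0.0, 0.0]]
w_out = [[2.0], [4.0]]
print(q_values([1.0, 1.0], w_hidden, w_out))                    # [1.0, 2.0]
print(greedy_action([1.0, 1.0], w_hidden, w_out))               # 1
print(td_target(1.0, [1.0, 1.0], False, 0.5, w_hidden, w_out))  # 2.0
```

The `max` over next-state Q-values is the same comparison that tabular Q-Learning performs per state; in a DQN it is computed by the network instead of being read from a table.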

Implementing the Deep Q-Network (DQN) in real-world applications, particularly on edge computing targets, faces major challenges due to limited resources. The complexity of DQN architectures, especially their neural networks, leads to larger chip area while power savings remain modest. DQN accelerators for edge computing are still at an early stage; existing designs favor straightforward solutions, which results in inefficient accelerator performance. To overcome these problems, this research proposes a lightweight design capable of both training and inference on a compact Processing Element (PE), together with a scalable design that supports configurable parameters, including the learning rate, the discount factor, the number of input and hidden layers, and the approximation parameters of the sigmoid activation function. Furthermore, the system is implemented on an SoC architecture and employs HW/SW co-design to enhance scalability.
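The text lists approximation parameters for the sigmoid activation as configurable but does not specify the approximation scheme. As one common hardware-friendly option (not necessarily the one used in this design), the sketch below implements the PLAN piecewise-linear sigmoid approximation, with the segment table standing in for the configurable parameters. `PLAN_SEGMENTS`, `sigmoid_pwl`, and `max_abs_error` are hypothetical names.

```python
import math

# Each segment: (lower bound of |x|, slope, intercept); a segment applies
# from its bound up to the next segment's bound. Values follow PLAN.
PLAN_SEGMENTS = (
    (0.0, 0.25, 0.5),
    (1.0, 0.125, 0.625),
    (2.375, 0.03125, 0.84375),
    (5.0, 0.0, 1.0),
)

def sigmoid_pwl(x: float, segments=PLAN_SEGMENTS) -> float:
    """Piecewise-linear sigmoid using the symmetry s(-x) = 1 - s(x)."""
    ax = abs(x)
    slope, intercept = segments[0][1], segments[0][2]
    for bound, m, c in segments:      # segments are sorted by bound
        if ax >= bound:
            slope, intercept = m, c
    y = slope * ax + intercept
    return y if x >= 0 else 1.0 - y

def max_abs_error(segments=PLAN_SEGMENTS, lo=-8.0, hi=8.0, steps=1601):
    """Worst absolute error against the exact sigmoid on a uniform grid."""
    worst = 0.0
    for i in range(steps):
        x = lo + (hi - lo) * i / (steps - 1)
        exact = 1.0 / (1.0 + math.exp(-x))
        worst = max(worst, abs(exact - sigmoid_pwl(x, segments)))
    return worst

print(sigmoid_pwl(0.0), sigmoid_pwl(1.0), sigmoid_pwl(-10.0))
print(max_abs_error())
```

Because the PLAN slopes are powers of two (1/4, 1/8, 1/32), each multiplication can be realized as a bit shift in hardware, and the symmetry means only the positive half of the curve needs dedicated logic.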

Research Method

The proposed architecture has the following features:

• Low-complexity hardware, achieved by enabling training and inference on a single configurable PE, sharing resources, reducing the number of layers, employing Stochastic Gradient Descent (SGD), and applying approximate computing techniques.

• Highly scalable design, achieved by making the neural network parameters configurable and by executing policy generation, the environment, and the reward function in software on the Processing System (PS). This supports diverse DQN design parameters and larger environments.
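The idea of training and inference sharing one PE can be illustrated in software. The sketch below assumes a single-hidden-layer network, a squared TD-error loss on the taken action, and plain SGD; every product in the forward pass, the error back-propagation, and the weight update goes through one `mac` routine, which plays the role of the shared PE. The function names and network shape are hypothetical, since the actual PE datapath is not described in this section.

```python
import math

def mac(acc: float, a: float, b: float) -> float:
    """The single shared multiply-accumulate operation (stands in for the PE)."""
    return acc + a * b

def dot(xs, ws):
    acc = 0.0
    for x, w in zip(xs, ws):
        acc = mac(acc, x, w)
    return acc

def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))

def forward(state, w_h, w_o):
    """Inference: hidden activations and one Q-value per action."""
    hidden = [sigmoid(dot(state, row)) for row in w_h]
    q = [dot(hidden, row) for row in w_o]
    return hidden, q

def sgd_step(state, action, target, w_h, w_o, lr):
    """One SGD step on 0.5 * (target - Q(s, a))^2, reusing mac() throughout.
    Returns the loss measured before the update."""
    hidden, q = forward(state, w_h, w_o)
    err = target - q[action]
    # Back-propagate to the hidden pre-activations before w_o changes.
    delta_h = [mac(0.0, err * w_o[action][j], h * (1.0 - h))
               for j, h in enumerate(hidden)]
    # Output layer: only the taken action's row has a nonzero gradient.
    w_o[action] = [mac(w, lr * err, h) for w, h in zip(w_o[action], hidden)]
    # Hidden layer update.
    for j, row in enumerate(w_h):
        w_h[j] = [mac(w, lr * delta_h[j], s) for w, s in zip(row, state)]
    return 0.5 * err * err

w_h = [[0.1, -0.2], [0.3, 0.05]]
w_o = [[0.2, -0.1], [0.0, 0.4]]
losses = [sgd_step([1.0, 0.5], 1, 1.0, w_h, w_o, lr=0.5) for _ in range(200)]
print(losses[0], losses[-1])
```

Updating one sample at a time, as SGD does, means no batch of gradients has to be buffered, which fits the goal of keeping memory and logic small.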

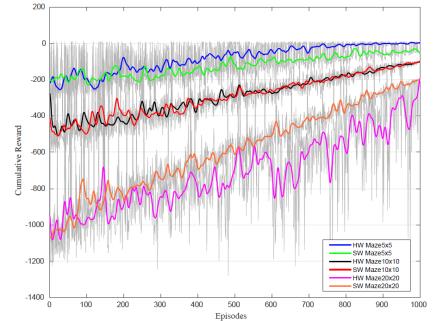
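The scalability feature splits the work between the PS (policy generation, environment, reward) and the accelerator (Q-value inference and training). The sketch below mimics that split in plain Python under several assumptions: `DqnConfig`, `AcceleratorStub`, `Corridor`, and the `infer`/`train` interface are hypothetical names, the policy is assumed to be epsilon-greedy, and the stub uses a linear Q-function over one-hot states instead of the accelerator's neural network so the example stays short.

```python
import random
from dataclasses import dataclass

@dataclass
class DqnConfig:
    """Hypothetical parameters the PS would write to the accelerator."""
    learning_rate: float = 0.1
    discount_factor: float = 0.9
    n_states: int = 5
    n_actions: int = 2

class AcceleratorStub:
    """Software stand-in for the accelerator (hypothetical interface)."""
    def __init__(self, cfg: DqnConfig):
        self.cfg = cfg
        self.w = [[0.0] * cfg.n_states for _ in range(cfg.n_actions)]

    def infer(self, state: int):
        return [row[state] for row in self.w]

    def train(self, state: int, action: int, target: float) -> None:
        q = self.w[action][state]
        self.w[action][state] = q + self.cfg.learning_rate * (target - q)

class Corridor:
    """PS-side environment: move right to reach the goal in the last cell."""
    def __init__(self, n: int):
        self.n = n
        self.pos = 0

    def reset(self) -> int:
        self.pos = 0
        return self.pos

    def step(self, action: int):
        if action == 0:
            self.pos = max(0, self.pos - 1)
        else:
            self.pos = min(self.n - 1, self.pos + 1)
        done = self.pos == self.n - 1
        return self.pos, (1.0 if done else 0.0), done

def run(episodes: int = 200, epsilon: float = 0.2, seed: int = 0) -> AcceleratorStub:
    rng = random.Random(seed)
    cfg = DqnConfig()
    acc = AcceleratorStub(cfg)
    env = Corridor(cfg.n_states)
    for _ in range(episodes):
        s, done = env.reset(), False
        while not done:
            q = acc.infer(s)                                # accelerator: inference
            if rng.random() < epsilon:                      # PS: epsilon-greedy policy
                a = rng.randrange(cfg.n_actions)
            else:                                           # greedy, random tie-break
                best = max(q)
                a = rng.choice([i for i, v in enumerate(q) if v == best])
            s2, r, done = env.step(a)                       # PS: environment + reward
            target = r if done else r + cfg.discount_factor * max(acc.infer(s2))
            acc.train(s, a, target)                         # accelerator: training
            s = s2
    return acc

acc = run()
print(acc.infer(3))
```

Keeping the environment and reward on the PS means a new task only changes software, while the accelerator interface stays fixed.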

Discussion & Results

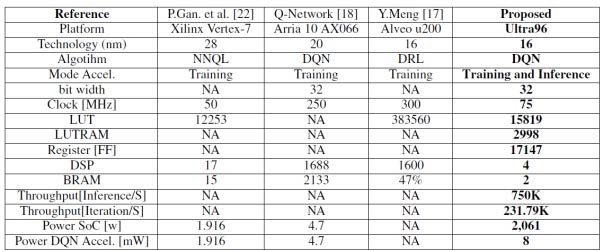

• Shared processing modules minimize chip area and power consumption, achieving area efficiencies of 44.25 IPS/LUT (inferences per second per LUT) and 14.65 ItPS/LUT (training iterations per second per LUT).

• The accelerator achieves speedups of 671x over the i7 CPU and 1106x over the embedded hardware in inference mode, and 287x over the i7 CPU and 314x over the embedded hardware in training mode.

• The processing times are 1.43 μs and 4.31 μs for inference and training, respectively.

• The designed DQN accelerator consumes only 8 mW at an operating clock frequency of 70 MHz.
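The reported figures can be cross-checked with simple arithmetic. The sketch below assumes that IPS/LUT and ItPS/LUT denote throughput (the reciprocal of the stated latency) divided by the LUT count, that the 8 mW figure applies in both modes, and that the speedups are latency ratios. These definitions are not stated explicitly here, so the derived values are illustrative only.

```python
# Figures reported in the results above.
T_INFER_S = 1.43e-6       # inference latency (s)
T_TRAIN_S = 4.31e-6       # training latency (s)
IPS_PER_LUT = 44.25
ITPS_PER_LUT = 14.65
POWER_W = 8e-3
SPEEDUP_INFER_I7 = 671
SPEEDUP_TRAIN_I7 = 287

def derived_metrics():
    """Values implied by the reported figures under the stated assumptions."""
    ips = 1.0 / T_INFER_S
    itps = 1.0 / T_TRAIN_S
    return {
        "ips": ips,
        "itps": itps,
        # Assumes IPS/LUT = (1 / latency) / LUT count.
        "luts_from_ips": ips / IPS_PER_LUT,
        "luts_from_itps": itps / ITPS_PER_LUT,
        # Assumes the 8 mW figure holds during each operation.
        "energy_per_inference_J": POWER_W * T_INFER_S,
        "energy_per_training_step_J": POWER_W * T_TRAIN_S,
        # Assumes speedup = CPU latency / accelerator latency.
        "i7_inference_latency_s": SPEEDUP_INFER_I7 * T_INFER_S,
        "i7_training_latency_s": SPEEDUP_TRAIN_I7 * T_TRAIN_S,
    }

for name, value in derived_metrics().items():
    print(f"{name}: {value:.4g}")
```

Under these assumptions both efficiency figures imply roughly the same LUT count (about 15.8 thousand), which is consistent with inference and training sharing the same hardware.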

Conclusion

• In this work, we have developed an AI accelerator architecture for the Deep Q-Network (DQN) that supports both inference and training on the same processing element.

• The proposed DQN accelerator handles a large and dynamic number of states by leveraging the SoC architecture.

• Real-time performance is achieved with speedups of up to 671x for inference and 287x for training, while power consumption remains low at about 8 mW.

• The designed system has the potential to be deployed in edge platforms and real-world applications, such as path planning/smart navigation in mobile robots.