Daffa Farros Alfarobby

STEI-ITB

Aulia Royyan

STEI-ITB

Jabar Nur Muhammad

STEI-ITB

Abstract

This study explores the integration of Neural Networks (NN) and Genetic Algorithms (GA) to improve Service Level Agreement (SLA) management in telecommunication infrastructure, particularly in Indonesia's 3T regions (Tertinggal, Terdepan, and Terluar, i.e., underdeveloped, frontier, and outermost regions). The research uses NNs to identify patterns in network monitoring data and GA to optimize SLA performance. By combining Long Short-Term Memory (LSTM) models with cloud-based platforms, the framework addresses inefficiencies in data processing and manual analysis. The results suggest considerable potential for improving SLA monitoring, reducing troubleshooting effort, and fostering equitable digital access in underserved areas.

Keywords: Neural Networks; Genetic Algorithms; SLA Optimization; LSTM; Telecommunications; Big Data; AI in 3T Regions.

Introduction

Service Level Agreements (SLAs) in telecommunications establish clear expectations regarding service quality, responsibilities, and performance metrics between providers and customers. These agreements face challenges such as infrastructure limitations in remote areas and the need for advanced monitoring systems. The cases of NMS A and NMS B illustrate the complexity of SLAs, which include guarantees on network uptime, internet speed, and response times, as well as compensation structures. SLA management has evolved with advanced computational techniques: Neural Networks (NN) for data processing and pattern recognition, Genetic Algorithms (GA) for optimization, and Long Short-Term Memory (LSTM) models for time-series forecasting. These techniques enable telecommunications providers to improve predictive accuracy, monitor services in real time, and optimize services proactively, marking a substantial advance in SLA management.
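To make the GA component concrete, the following is a minimal sketch of a real-coded genetic algorithm (tournament selection, blend crossover, Gaussian mutation, and elitism) in plain Python. It is not the authors' implementation: the function `genetic_algorithm`, its parameters, and the toy objective `toy_fitness` are hypothetical. In this framework the fitness would be derived from model evaluation metrics (for example, negative RMSE), so any callable that returns a larger value for a better candidate could be plugged in.

```python
import random
from typing import Callable, List, Sequence, Tuple

Individual = List[float]


def genetic_algorithm(
    fitness: Callable[[Individual], float],
    bounds: Sequence[Tuple[float, float]],
    pop_size: int = 30,
    generations: int = 60,
    mutation_rate: float = 0.2,
    mutation_scale: float = 0.05,
    elite: int = 2,
    seed: int = 0,
) -> Individual:
    """Maximize `fitness` over a box given by one (lo, hi) pair per gene."""
    rng = random.Random(seed)

    def clip(value: float, i: int) -> float:
        lo, hi = bounds[i]
        return min(max(value, lo), hi)

    def tournament(pop: List[Individual]) -> Individual:
        # Binary tournament: draw two individuals, keep the fitter one.
        a, b = rng.sample(pop, 2)
        return a if fitness(a) >= fitness(b) else b

    population = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        # Elitism: the best individuals survive unchanged.
        next_gen = [list(ind) for ind in population[:elite]]
        while len(next_gen) < pop_size:
            p1, p2 = tournament(population), tournament(population)
            alpha = rng.random()
            # Blend crossover: the child lies on the segment between its parents.
            child = [alpha * x + (1 - alpha) * y for x, y in zip(p1, p2)]
            for i, (lo, hi) in enumerate(bounds):
                if rng.random() < mutation_rate:
                    # Gaussian mutation scaled to the gene's range, clipped to bounds.
                    child[i] = clip(child[i] + rng.gauss(0.0, mutation_scale * (hi - lo)), i)
            next_gen.append(child)
        population = next_gen
    return max(population, key=fitness)


def toy_fitness(v: Individual) -> float:
    # Hypothetical placeholder objective with its maximum at (3, -1).
    return -((v[0] - 3.0) ** 2 + (v[1] + 1.0) ** 2)


best = genetic_algorithm(toy_fitness, bounds=[(-10.0, 10.0), (-10.0, 10.0)])
print(best)
```

Fixing the seed makes the search reproducible; replacing `toy_fitness` with a metric-based score is the only change needed to point the same loop at a model-selection problem.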

Research Method

To develop a reliable SLA optimization framework, data was collected from Network Monitoring Systems (NMS) and processed in two stages: Data Cleaning, which removes inconsistencies and null values from the raw data so that it is compatible with machine learning models; and Feature Engineering, which selects and normalizes relevant features, including time-series metrics such as uptime and service availability trends. A Recurrent Neural Network (RNN) architecture with Long Short-Term Memory (LSTM) layers was then implemented, and six configurations were tested:
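The two preprocessing stages, plus the windowing step that LSTM training usually requires, can be sketched in plain Python. This is an illustrative sketch rather than the authors' pipeline: the choice of min-max scaling and the helpers `clean_series`, `min_max_normalize`, and `make_windows` are assumptions, since the source does not state which normalization or window length was used.

```python
import math
from typing import List, Optional, Sequence, Tuple


def clean_series(values: Sequence[Optional[float]]) -> List[float]:
    """Data Cleaning: drop null (None) and non-finite (NaN/inf) readings."""
    return [float(v) for v in values if v is not None and math.isfinite(v)]


def min_max_normalize(values: Sequence[float]) -> Tuple[List[float], float, float]:
    """Feature Engineering: scale values to [0, 1]; also return (min, max) for inverse scaling."""
    lo, hi = min(values), max(values)
    span = hi - lo
    if span == 0:
        return [0.0 for _ in values], lo, hi
    return [(v - lo) / span for v in values], lo, hi


def make_windows(series: Sequence[float], window: int) -> Tuple[List[List[float]], List[float]]:
    """Turn a series into (past `window` values -> next value) training pairs."""
    xs, ys = [], []
    for start in range(len(series) - window):
        xs.append(list(series[start:start + window]))
        ys.append(series[start + window])
    return xs, ys


# Hypothetical uptime readings (%) with gaps, as an NMS export might contain.
raw = [99.5, None, 98.0, float("nan"), 100.0, 99.0]
clean = clean_series(raw)
scaled, lo, hi = min_max_normalize(clean)
X, y = make_windows(scaled, window=2)
print(X, y)
```

In practice the scaling bounds would be computed on the training split only and reused for validation and serving data, so that no information leaks from the evaluation set.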

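• Model A1: three LSTM layers of 64, 32, and 16 units

• Model A2: three LSTM layers of 128, 128, and 64 units

• Model A3: three LSTM layers of 256, 256, and 128 units

• Model B1: two LSTM layers of 64 and 32 units

• Model B2: two LSTM layers of 128 and 128 units

• Model B3: two LSTM layers of 256 and 256 units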

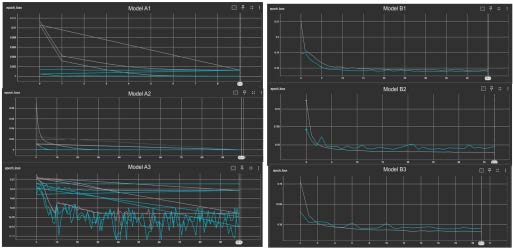

The 3-layer models (A1, A2, A3) are designed to handle complex patterns and higher capacity tasks, making them suitable for learning long-range dependencies, though they may incur increased computational costs and a higher risk of overfitting. In contrast, the 2-layer models (B1, B2, B3) are optimized for simpler temporal dependencies, providing lower computational costs and faster training times while effectively capturing essential temporal dynamics with a reduced risk of overfitting.
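The computational-cost difference between the A- and B-series can be estimated by counting trainable parameters. The sketch below uses the standard LSTM parameter formula, 4·((d_in + u)·u + u) for a layer with u units and input width d_in (four gates, each with an input kernel, a recurrent kernel, and a bias, as in a Keras `LSTM` layer with `use_bias=True`). It assumes a single input feature and a one-unit dense output head; neither assumption is stated in the source.

```python
from typing import Dict, List


def lstm_params(input_dim: int, units: int) -> int:
    # Four gates, each with an input kernel, a recurrent kernel, and a bias vector.
    return 4 * ((input_dim + units) * units + units)


def stacked_lstm_params(layer_units: List[int], n_features: int, dense_out: int = 1) -> int:
    """Trainable parameters of stacked LSTM layers plus an (assumed) Dense output head."""
    total = 0
    in_dim = n_features
    for units in layer_units:
        total += lstm_params(in_dim, units)
        in_dim = units  # each layer feeds its hidden state to the next
    total += in_dim * dense_out + dense_out  # Dense head: weights + bias
    return total


CONFIGS: Dict[str, List[int]] = {
    "A1": [64, 32, 16],
    "A2": [128, 128, 64],
    "A3": [256, 256, 128],
    "B1": [64, 32],
    "B2": [128, 128],
    "B3": [256, 256],
}

for name, layers in CONFIGS.items():
    print(name, stacked_lstm_params(layers, n_features=1))
```

Parameter count is only a proxy for training cost, but it shows how quickly capacity grows with layer width: the recurrent kernel alone scales with the square of the unit count.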

The system design comprised three subsystems: a Python-based backend deployed on Google Cloud Platform (GCP), a React-based frontend for real-time SLA monitoring, and a prediction model developed in TensorFlow for SLA forecasting.
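As a sketch of how the Python backend might query the deployed prediction model, the snippet below builds a request for the TensorFlow Serving REST predict endpoint (`POST /v1/models/<name>:predict` with an `instances` list, answered with a `predictions` list) using only the standard library. The host, port 8501 (as in TensorFlow Serving's Docker examples), the model name `sla_lstm`, and the one-feature-per-timestep input shape are all assumptions, not details from the source.

```python
import json
import urllib.request
from typing import List, Sequence


def build_predict_request(
    windows: Sequence[Sequence[float]],
    host: str = "http://localhost:8501",   # hypothetical serving address
    model_name: str = "sla_lstm",          # hypothetical model name
) -> urllib.request.Request:
    # LSTM input shape is [batch, timesteps, features]; here features = 1.
    instances = [[[float(v)] for v in window] for window in windows]
    payload = json.dumps({"instances": instances}).encode("utf-8")
    return urllib.request.Request(
        url=f"{host}/v1/models/{model_name}:predict",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_predictions(body: bytes) -> List[float]:
    # A Dense(1) head yields one single-element list per instance.
    predictions = json.loads(body)["predictions"]
    return [float(p[0]) if isinstance(p, list) else float(p) for p in predictions]


req = build_predict_request([[0.75, 0.0]])
# Sending it would look like: parse_predictions(urllib.request.urlopen(req, timeout=10).read())
print(req.full_url)
```

Keeping request construction and response parsing as separate functions lets the backend be tested without a running model server.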

Implementation

The models were trained with TensorFlow on the cleaned dataset, with validation used to monitor overfitting and underfitting. Performance was assessed using Mean Absolute Error (MAE), Mean Squared Error (MSE), Root Mean Squared Error (RMSE), and the Coefficient of Determination (R²). In parallel, a responsive web dashboard was developed to display SLA predictions, uptime/downtime metrics, and restitution trends. For deployment, the trained model was served on Google Cloud Platform (GCP) using TensorFlow Serving to ensure scalability, and the web application was hosted on a cloud platform for easy stakeholder access. The system's performance was evaluated with these metrics, which also served as fitness functions for assessing model accuracy.
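The four evaluation metrics can be written out directly, which makes their definitions explicit: MAE and MSE average the absolute and squared errors, RMSE is the square root of MSE, and R² = 1 − SS_res/SS_tot compares the residual error with the variance of the targets. The sketch below is plain Python rather than TensorFlow; `rmse_fitness` is a hypothetical helper showing how an error metric could be negated into a fitness value for a GA that maximizes fitness.

```python
import math
from typing import Sequence


def _check(y_true: Sequence[float], y_pred: Sequence[float]) -> None:
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and of equal length")


def mae(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    _check(y_true, y_pred)
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def mse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    _check(y_true, y_pred)
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    return math.sqrt(mse(y_true, y_pred))


def r2(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    _check(y_true, y_pred)
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))  # residual sum of squares
    ss_tot = sum((t - mean) ** 2 for t in y_true)               # total sum of squares
    if ss_tot == 0:
        raise ValueError("R² is undefined when all targets are equal")
    return 1 - ss_res / ss_tot


def rmse_fitness(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    # Hypothetical: lower error means higher fitness for a maximizing GA.
    return -rmse(y_true, y_pred)


y_true, y_pred = [1.0, 2.0, 3.0, 4.0], [1.0, 2.0, 3.0, 5.0]
print(mae(y_true, y_pred), mse(y_true, y_pred), rmse(y_true, y_pred), r2(y_true, y_pred))
```

Because RMSE and MSE are monotonically related, both rank candidate models identically; RMSE is simply easier to read because it is in the units of the target.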

Discussion & Results

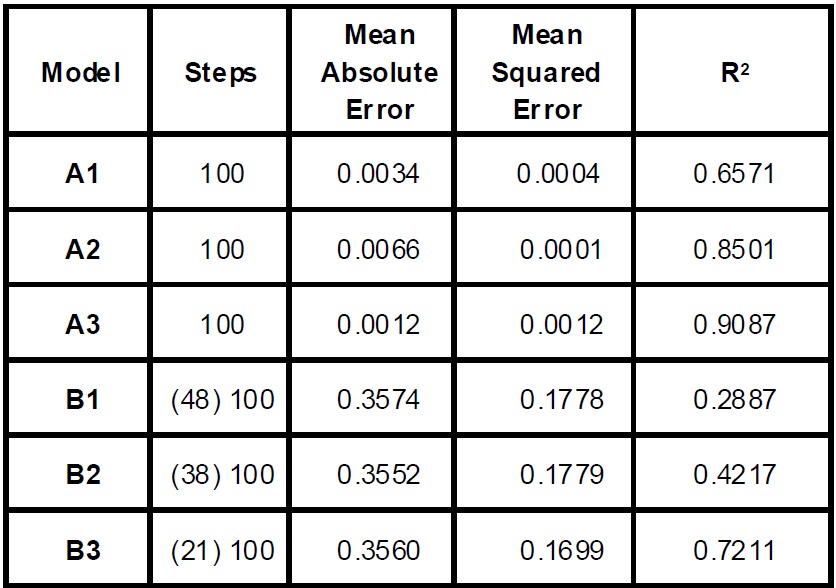

The six LSTM-based predictive models (A1, A2, A3, B1, B2, and B3) yielded varied outcomes in terms of Mean Absolute Error (MAE), Mean Squared Error (MSE), and the Coefficient of Determination (R²).

The results of the LSTM architectures reveal important performance trade-offs across the evaluation metrics.

Three-Layer Models (A-Series): The A-series models (A1, A2, A3) improve as capacity increases, with lower MAE and MSE values and higher R² scores. Model A3, the largest configuration, achieves the best performance, demonstrating its ability to capture complex temporal dependencies. However, this complexity raises the risk of overfitting and calls for careful regularization.

Two-Layer Models (B-Series): The B-series models (B1, B2, B3) are less complex but show a significant performance gap relative to the A-series, with higher MAE and MSE values and lower R² scores. Although increasing capacity within the B-series improves performance, these models struggle to capture intricate patterns.

Conclusion

Among the tested models, Model A2 stands out as the most reliable configuration: although Model A3 achieves the best scores, A2 strikes a better balance between accuracy and computational efficiency, with a smaller capacity that lowers the overfitting risk noted for A3. Future work will integrate more diverse datasets and advanced machine learning techniques to further improve the system's reliability and adaptability. The implementation of this solution represents a step toward optimizing telecommunications infrastructure, with the aim of improving service delivery and customer satisfaction.